Chapter 9 Dimensionality reduction

Let \(X_1,...,X_n\in\mathbb{R}^p\) be our high-dimensional data. We want to project the data onto a lower-dimensional space, that is we want \(q<<p\) and \(f:\mathbb{R}^p \mapsto \mathbb{R}^q\) such that \(||f(X_k)-f(X_l)|| \approx ||X_k-X_l||\) – the distance between any two points in the lower-dimensional space should be close to their corresponding distance in the original space. We can respecify the problem as

\[ \begin{aligned} && \max_{k,l\le n}\left| \frac{||f(X_k)-f(X_l)||^2}{||X_k-X_l||^2}-1\right|&\le \varepsilon\\ \end{aligned} \]

where \(\varepsilon\) is some desired accuracy.

9.1 Random projections

Let \(f(X)=WX\), \(X\in\mathbb{R}^p\), \(W\) \((q\times p)\) and for the elements of \(W\): \(w_{ij} \sim \mathcal{N}(0, \frac{1}{q})\) are idd. First note that

\[ \begin{aligned} && \mathbb{E}||f(X)||^2&= \mathbb{E} ||W X||^2= \mathbb{E} \sum_{i=1}^{q} \left( \sum_{j=1}^{p} X_jW_{i,j}\right)^2\\ && &= \sum_{i=1}^{q}\mathbb{E} \left( \sum_{j=1}^{p} X_jW_{i,j}\right)^2= \sum_{i=1}^{q} \frac{||X||^2}{q}\\ \end{aligned} \]

where the last equality follows from \(\sum_{j=1}^{p} X_jW_{i,j} \sim \mathcal{N}(0, \frac{1}{q}\sum_{j=1}^{p} X_j^2 ) = \sim \mathcal{N}(0,\frac{||X||^2}{q})\). Then finally:

\[ \begin{aligned} && \mathbb{E}||f(X)||^2&=||X||^2 \\ \end{aligned} \] Now in particular for any \(i,j\le n\)

\[ \begin{aligned} && \mathbb{E}||f(X_k)-f(X_l)||^2&= \mathbb{E}||W(X_k-X_l)||^2=||X_k-X_l||^2 \\ \end{aligned} \]

Now for the actual proof:

This is quite amazing since the final result is independent of \(p\).

Let \(p=10^{6}\) and \(n=100\). Then with probability \(0.9\) we have

\[ \begin{aligned} && \max_{k,l\le n}\left| \frac{||f(X_k)-f(X_l)||^2}{||X_k-X_l||^2}-1\right| &\le 0.01 \\ \end{aligned} \]

whenever \(q\ge2.48585\times 10^{5}\).

9.2 PCA

Another common way to reduce model dimensionality is through principal component analysis (PCA). Very loosely defined principal components can be thought of as describing the main sources of variation in the data. While in theory any design matrix \(X\) \((n \times p)\) can be decomposed into its principal components, in practice PCA can be more or less useful for dimensionality reduction depending on how the \(p\) different features in \(X\) related to each other. We will see that in particular for highly correlated data PCA can be an extremely useful tool for dimensionality reduction.

9.2.1 The maths behind PCA

PCA projects \(X\) into a \(q\)-dimensional space where \(q \le p\), such that the covariance matrix of the \(q\)-dimensional projection is maximised. Intuitively, we want to find the linear combination of points in \(X\) which explains the largest part of the variance in \(X\). Formally (in a very stylised fashion) this amounts to

\[ \begin{aligned} && \max_a& \left( \Omega = P_1^TP_1 = v^TX^TXv = v^T \Sigma v \right) \\ \text{s.t.} && v^Tv&= \mathbf{1} && \text{(loading vector)} \\ \text{where}&& P_1 &= v^T X && \text{(principal component)} \\ \end{aligned} \]

where \(v\) is given by the eigenvector corresponding to the largest eigenvalue of \(\Sigma\) - the covariance matrix of \(X\). We can eigen-decompose \(\Sigma\)

\[ \begin{aligned} && \Sigma&= V \Lambda V^{-1} \\ \end{aligned} \]

where \(\Lambda\) is a diagonal matrix of eigenvalues in decreasing order.

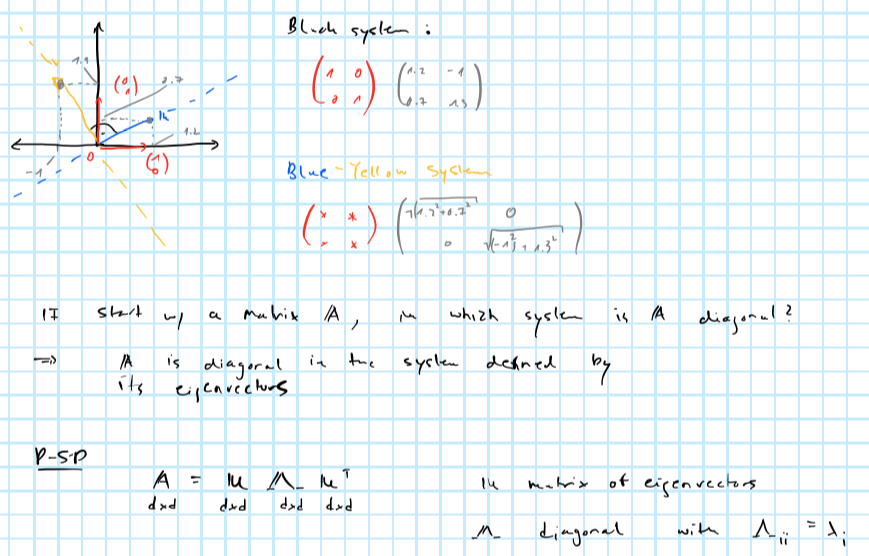

Sometimes eigenvectors \(V\) are referred to as rotation vectors: the first eigenvector - i.e. the one corresponding to the highest eigenvalue - rotates the data into the direction of the highest variation. Remembers this image from the brush-ups?

Projecting into orthogonal subspace

9.2.2 An intuitive example

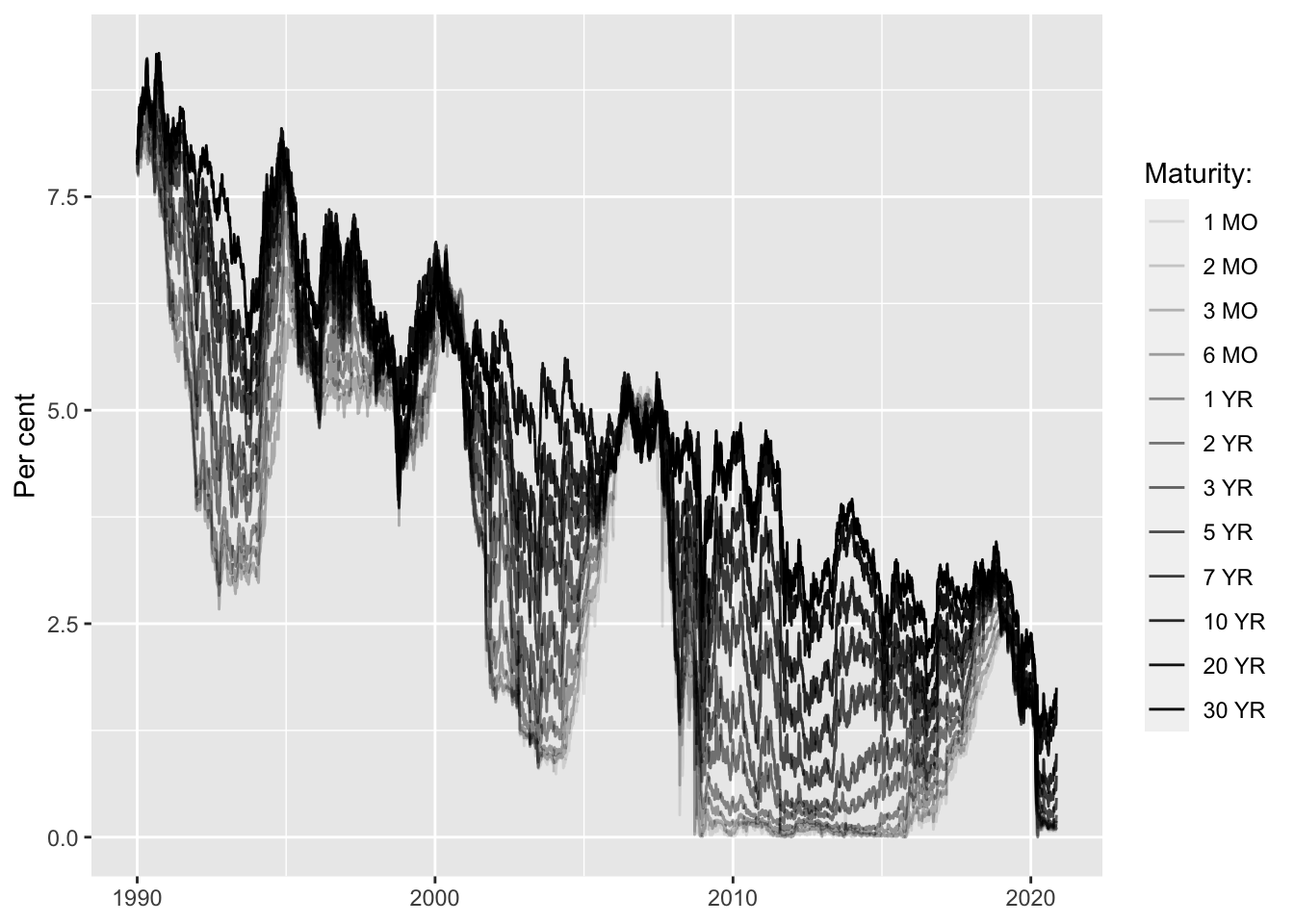

PCA can be applied very well to highly correlated time series. Take for example US treasury yields of varying maturities over time: they are intrinsically linked to each other through the term-structure of interest rates. As we will see the first couple of principal components have a very intuitive interpretation when applied to yield curves.

Let’s perform PCA through spectral decomposition in R and look at the output.

| percent |

|---|

| 98.0788860 |

| 1.6079986 |

| 0.3004019 |

| 0.0064192 |

| 0.0027590 |

| 0.0014110 |

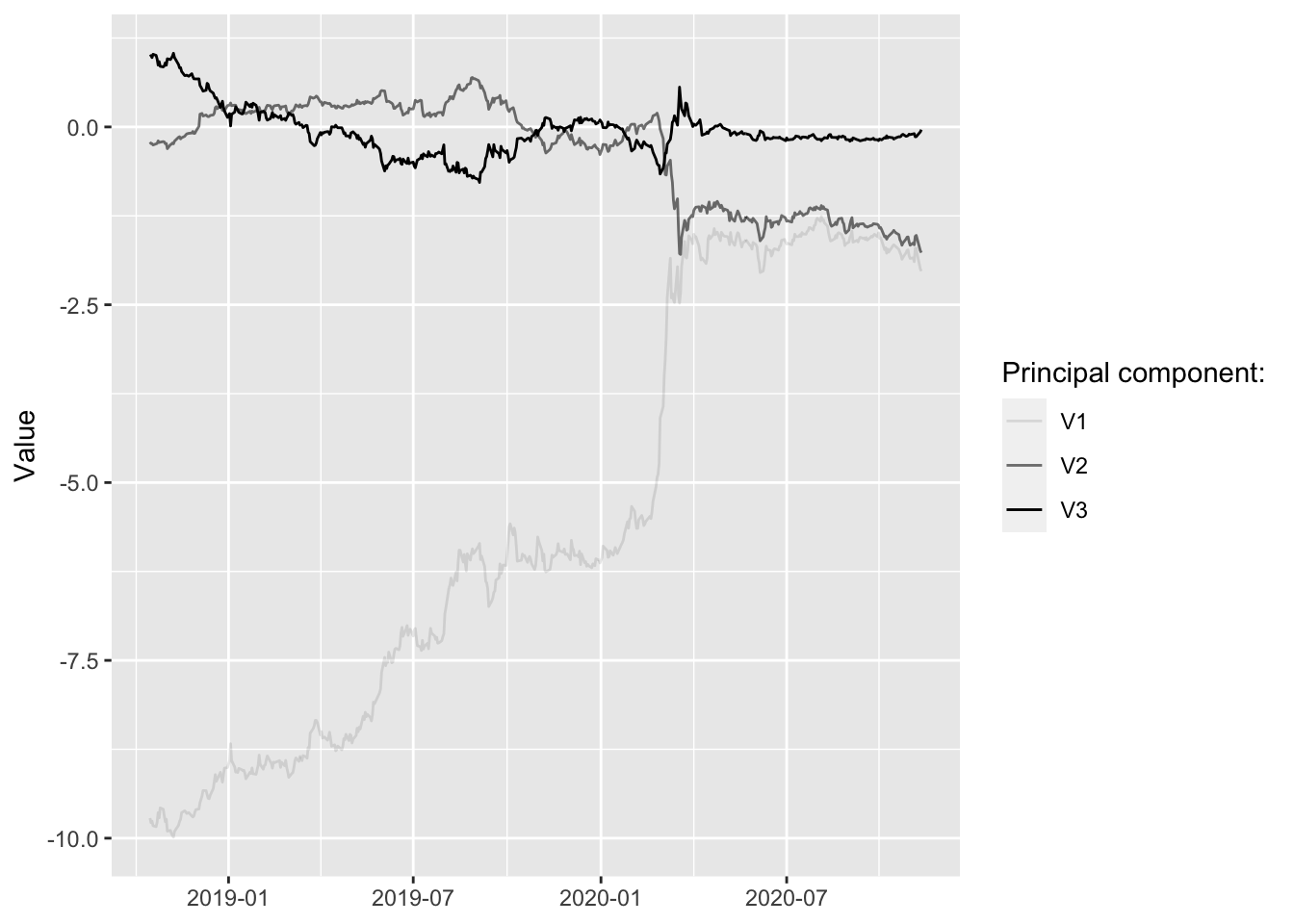

Let’s compute the first three principal components and plot them over time. How can we make sense of this? It is not obvious and in many applications interpreting the components at face value is a difficult task. In this particular example it turns out that we can actually make sense of them.

Let’s see what happens when we play with the components. (Shiny app not run in static HTML)

9.3 PCA for feature extraction

9.3.1 Squared elements of eigenvectors

Consider again using the spectral decomposition of \(\Sigma=\mathbf{X}^T\mathbf{X}\) to perform PCA:

\[ \begin{aligned} && \Sigma&= V \Lambda V^{T} \\ \end{aligned} \]

A practical complication with PCA is that generally the principal components cannot be easily interpreted. If we are only interested in prediction, then this may not be a concern. But when doing inference, we are interested in the effect of specific features rather than the principal components that describe the variation in the design matrix \(\mathbf{X}\). It turns out that we can still use spectral decomposition to select features directly. The clue is to realize that the \(i\)-th squared element \(v^2_{ji}\) of the \(j\)-th eigenvector can be though of as the percentage contribution of feature \(i\) to the variation in the \(j\)-th principal component. Having already established above that eigenvalues provide a measure of how much of the overall variation in \(X\) is explained by the \(j\)-th principal component, these two ideas can be combined to give us a straight-forward way to identify important features (for explaining variation in \(\mathbf{X}\)). In particular, compute \(\mathbf{s}= \text{diag}\{\Lambda\}/ \text{tr} (\Lambda)\) \((p \times 1)\) where \(\Lambda\) is diagonal matrix of eigenvalues and let \(\mathbf{V}^2\) be the matrix of eigenvectors with each elements squared. Consider computing the following vector \(\mathbf{r} \in \mathbb{R}^p\) which will use to rank our \(p\) features:

\[ \begin{equation} \begin{aligned} && \mathbf{r}&=\mathbf{V}^2 \mathbf{s} \\ \end{aligned} \tag{9.1} \end{equation} \]

By construction elements in \(\mathbf{r}\) sum up to one so they can be thought of as percentages describing the overall importance of individual features in terms of explaining the overall variation in the design matrix. This last point is important: PCA never even looks at the outcome variable that we are interested in modelling. Even features identified as not important by PCA may in fact be very important for the model, so the ranking is merely a guideline of sorts.

Then say we were interested in decreasing the number of features from \(p=12\) to \(q=5\), then this approach suggests using the following features:

| feature | importance |

|---|---|

| 30 YR | 0.1328828 |

| 20 YR | 0.1146219 |

| 10 YR | 0.0887639 |

| 7 YR | 0.0803942 |

| 6 MO | 0.0751135 |

9.3.2 SVD

Instead of using spectral decomposition we could use (compact) SVD for PCA. Compact SVD works decomposes any matrix \(X\) \((n \times p)\) as follows

\[ \begin{aligned} && X&= U S V^{T} \\ \end{aligned} \]

where \(S\) is diagonal \((p\times p)\), \(U\) is \((n \times r)\) and \(V\) is \((r \times p)\) with \(r=\min(n,p)\). The diagonal elements of \(S\) are referred to as singular values (Note: it turns out that they correspond to the square roots of the eigenvalues of \(X^TX\)). It is then easy to see that \(V\) is once again the matrix of eigenvectors of \(X^tX\) as above

\[ \begin{aligned} && \Sigma&=X^TX=(V^TS^TU^T)US V \\ && &= V(S^TS)V^T=V\Lambda V^T\\ \end{aligned} \] where \(U^TU=I\) by the fact that \(U\) is orthogonal and from the note above it should be clear why \(S^TS=\Lambda\).

Unsurprisingly this gives us the equivalent ranking of features:

| feature | importance |

|---|---|

| 30 YR | 0.1491249 |

| 20 YR | 0.1188201 |

| 10 YR | 0.0816704 |

| 1 MO | 0.0778362 |

| 2 MO | 0.0750598 |

9.4 High-dimensional data

Now that we have developed a good intuition of PCA, it is time to face its shortfalls. While PCA can be used as tool to reduce dimensionality it is actually inconsistent for cases where \(p>>n\). Enter: regularized SVD.

9.4.1 Regularized SVD

Witten, Tibshirani, and Hastie (2009) propose a regularized version of SVD where the \(L_1\)-norm eigenvectors \(\mathbf{v}_k\) is penalized. In particular the authors propose a modified version of the following optimization problem:

\[ \begin{equation} \begin{aligned} && \max_a& \left( \Omega = P_1^TP_1 = \mathbf{v}^TX^TX\mathbf{v} = \mathbf{v}^T \Sigma \mathbf{v} \right) \\ \text{s.t.} && \mathbf{v}^T\mathbf{v}&= \mathbf{1} \\ && |\mathbf{v}|& \le c \\ \end{aligned} \tag{9.2} \end{equation} \]

Note that this essentially looks like spectral decomposition with an added LASSO penality: consequently depending on the regularization parameter \(c\) some elements of \(\mathbf{v}_k\) will be shrinked to exactly zero and hence this form of penalized SVD is said yield sparse principal components. The optimization problem in (9.2) - originally proposed Jolliffe, Trendafilov, and Uddin (2003) - is non-convex and hence computationally hard. Witten, Tibshirani, and Hastie (2009) propose to instead solve the following optimization problem:

\[ \begin{equation} \begin{aligned} && \max_a& \left( \mathbf{u}^TX\mathbf{v} \right)\\ \text{s.t.} && \mathbf{v}^T\mathbf{v}&= \mathbf{1} \\ && \mathbf{u}^T\mathbf{u}&= \mathbf{1} \\ && |\mathbf{v}|& \le c_2 \\ \end{aligned} \tag{9.3} \end{equation} \] This looks like singular-value decomposition with LASSO penality on \(\mathbf{v}\). Discussing the implementation of their proposed algorithm could be interesting, but is beyond the scope of this. Fortunately the authors have build an R package that conveniently implements their algorithm.

Let’s generate a random matrix with \(n=50\) and \(p=1000\) and see if we can still apply the ideas developed above for feature extraction. It turns out we can proceed pretty much exactly as before. Once again we will pre-multiply by \(\mathbf{V}^2\) to obtain a ranking in terms of feature importance. Here it should be noted that we would not generally compute all \(p\) principal components, but focus on say the first \(l\). Of course for \(l<p\) they will never not explain the total variation in \(X\). In that sense our ranking vector \(\mathbf{r}\) now ranks features in terms of their contribution to the overall variation explained by the first \(l\) sparse principal components. Of course for many features that contribution will be zero, since the LASSO penalty shrinks some elements in \(\mathbf{V}\) to zero. Hence some selection is already done for use, but we can still proceed as before to select our final set of \(m\) features.

| feature | importance |

|---|---|

| 2 | 0.2665423 |

| 225 | 0.1836013 |

| 241 | 0.0957684 |

| 135 | 0.0896406 |

| 80 | 0.0772818 |

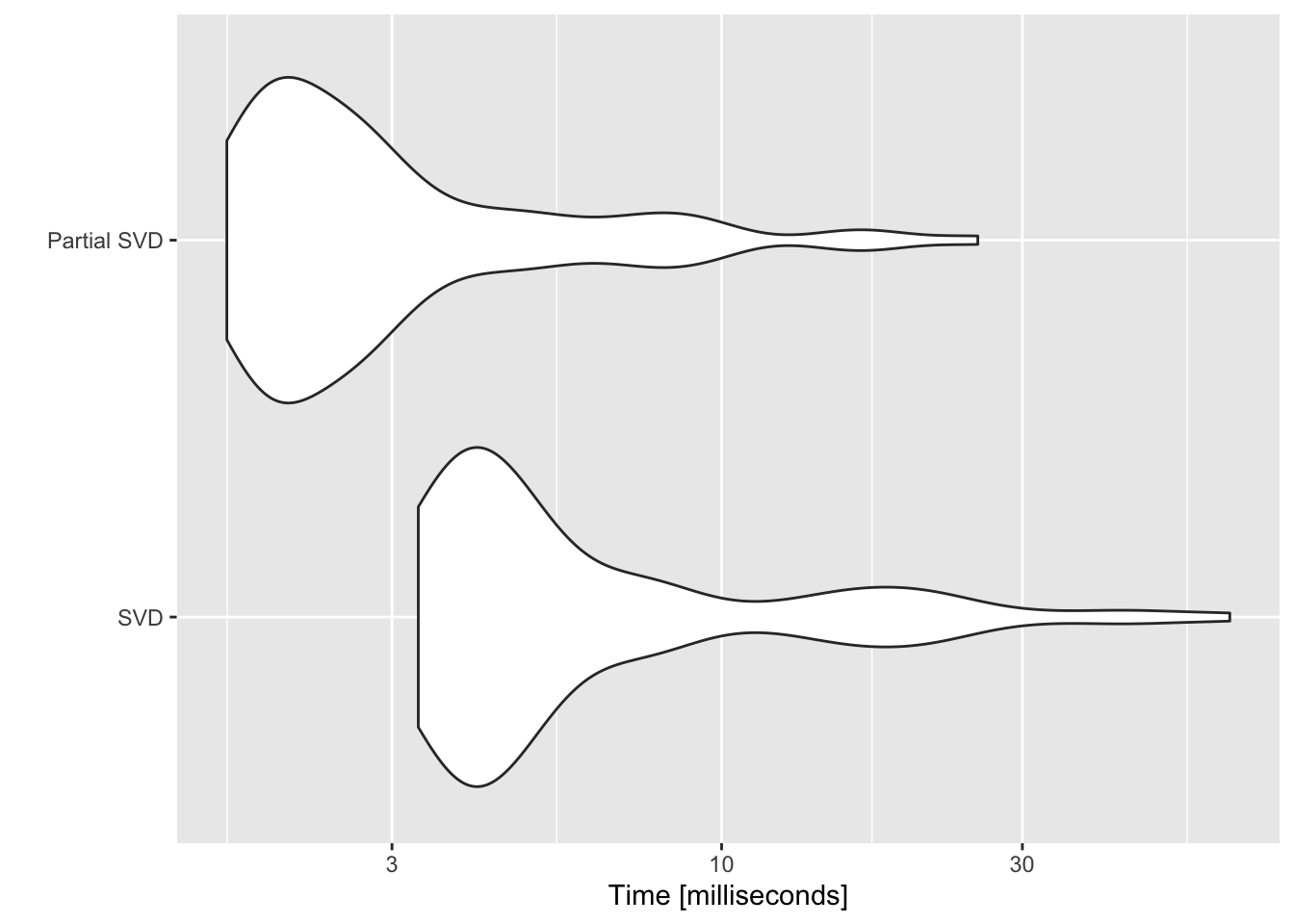

9.4.2 Fast, partial SVD

9.5 Forward search

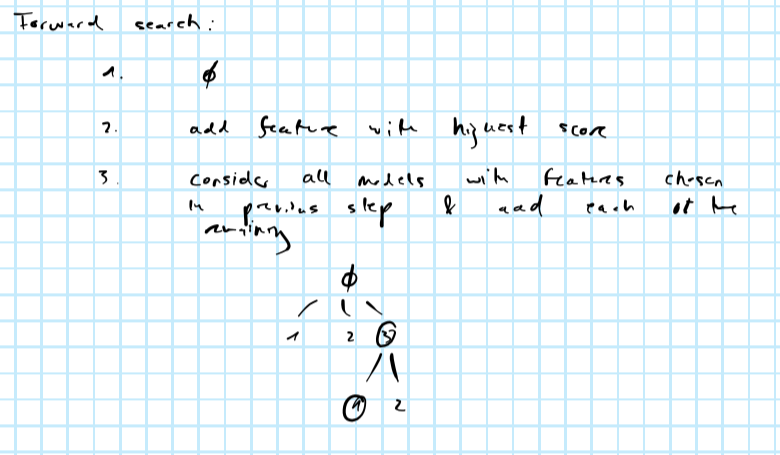

An interesting idea could be combine the above methods with forward search:

Forward search

As per the image above, at the \(k\)-th step forward search requires fitting the model \((p-k)\) times to compare scores. A good idea might be to use the ranking of features obtained from PCA and only fit the model for the \(l<(p-k)\) most important features at each step. The below summarizes the idea:

- Run PCA to rank features in terms of their contributions to the overall variation in the design matrix \(X\).

- Run a forward search:

- In the first step fit the model for the \(l\) most important features (ranked by PCA). Choose the feature \(x_j\) with highest score and remove it from the ranking.

- Proceed in the same way in the following step until convergence.

Note that convergence is somewhat loosely defined here. In practice I would look at how much the model improves by including an additional features (e.g. how much the likelihood increases or the MSE decreases). For that we would need to define some threshold value \(\tau\) which would correspond to the minimum improvement we would want to obtain for including another feature (but strictly speaking that is a hyperparameter, so if you wanted to avoid those altogether I would just rely on the ranking methods proposed above).